Qualcomm Launches AI Chips for Data Centers

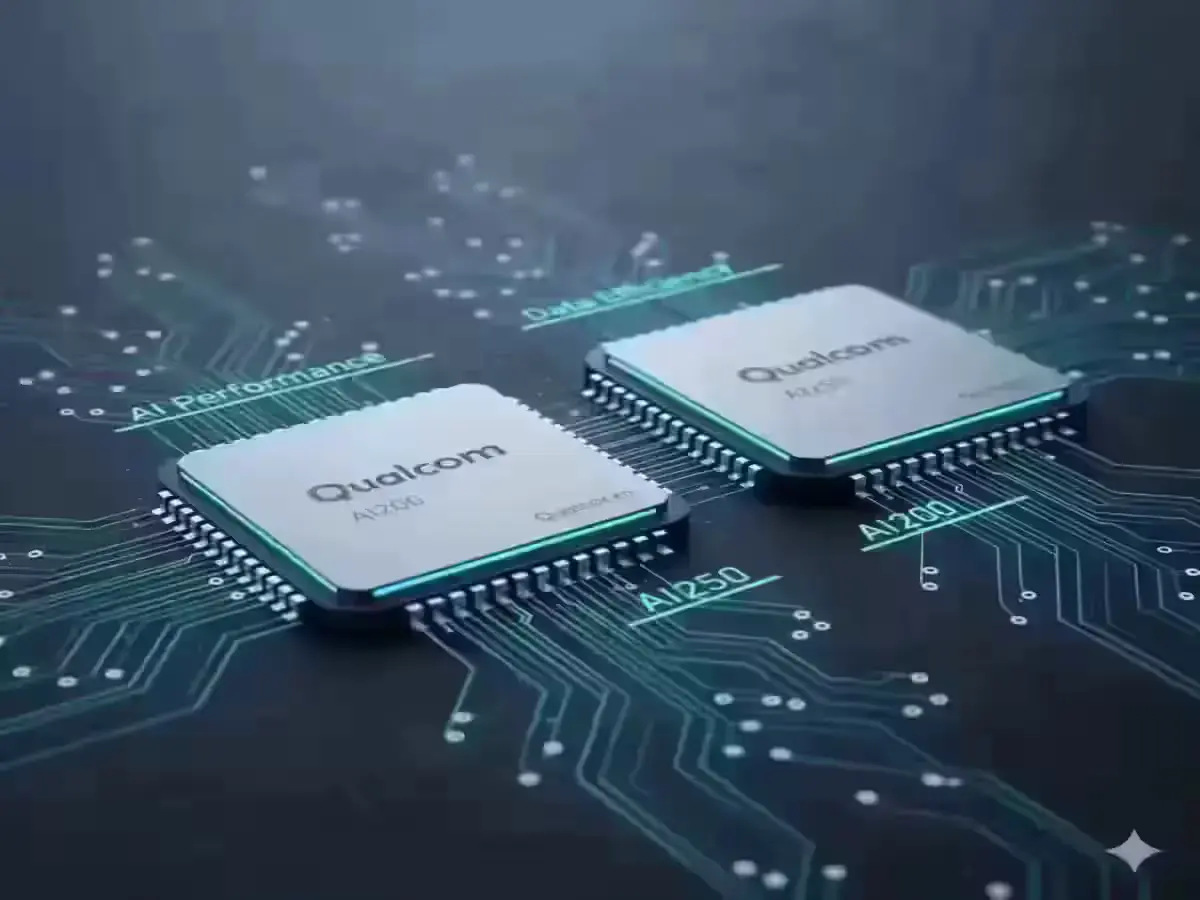

Qualcomm has announced its AI200 and AI250 chips, marking its entry into the AI data center market. The company, best known for mobile processors, is now targeting large-scale AI servers, a space dominated by Nvidia and AMD. The announcement has already drawn investor interest and moved the QCOM share price.

What’s New in Qualcomm’s AI Chips

The new Qualcomm AI chips are built to handle data-center-grade inference workloads efficiently. The AI200 and AI250 are designed with a focus on power efficiency, scalability, and cost optimization. Qualcomm claims these chips can deliver strong AI performance while consuming less energy. Energy use is a growing concern for large data centers that need sustainable solutions.

These chips use an architecture optimized for AI inference deployments by customers such as Humain, the Saudi AI company, showing Qualcomm’s shift from smartphone hardware to high-performance AI infrastructure. The design reportedly integrates neural processing units (NPUs) and specialized accelerators tuned for machine learning inference tasks.

Features and Specifications

- Chip Models: AI200 and AI250

- Focus: AI inference, low power consumption, scalability

- Architecture: Custom neural cores with dedicated AI engines

- Use Case: Data centers, enterprise AI workloads, and edge cloud systems

- Performance: Optimized for large language models and generative AI tasks

Price and Availability

Qualcomm hasn’t shared official pricing yet, but early reports suggest these chips will cost significantly less than high-end Nvidia GPUs. This could attract data-center operators looking for efficient, budget-friendly AI solutions. The chips are expected to roll out in early 2026, with test units already sent to major partners and cloud providers.

Design and Market Strategy

The AI200 and AI250 are compact, modular, and built to scale across different server configurations. Qualcomm aims to use its long experience in low-power mobile chip design to deliver AI chips that are both cost-effective and energy-efficient. The company also plans to partner with key cloud service providers to expand adoption globally.

Impact on Nvidia, AMD, and QCOM Share Price

Industry analysts believe Qualcomm’s entry will increase competition in the AI chip segment. While Nvidia remains the market leader in training chips, Qualcomm’s focus on inference and power efficiency gives it a distinct angle. The QCOM share price rose after the announcement, reflecting growing confidence in the company’s AI strategy.

Reviews and Early Reactions

Tech reviewers and early partners have praised Qualcomm’s approach to balancing efficiency and performance. While it’s too early to compare benchmarks directly, initial testing suggests that Qualcomm’s chips could help reduce AI infrastructure costs by up to 40% for certain inference tasks.

Disclaimer

This article is for informational purposes only and does not constitute investment advice. Investors should perform their own research before making financial decisions involving Qualcomm or related companies.

FAQs

What are Qualcomm’s new AI chips?

The AI200 and AI250 are Qualcomm’s first data-center AI chips, designed to handle inference workloads efficiently.

How will these chips affect Nvidia and AMD?

Qualcomm’s entry introduces more competition, particularly in the inference and low-power AI markets dominated by Nvidia and AMD.

When will Qualcomm’s AI chips be released?

The official commercial launch is expected in early 2026, with initial samples already distributed to partners.

Does this affect the QCOM share price?

Yes. Qualcomm’s stock saw a short-term boost after the AI chip announcement due to investor optimism about its new market strategy.